Deciphering the requirements for ESSA evidence-based studies

School administrators use different sources of evidence to make decisions, including decisions about which education technology (edtech) products to use in their classrooms. These sources might include reviews of student performance indicators, teacher evaluations, parent and student feedback, budgetary and financial information, school climate and culture data, and program evaluations. Administrators know that not all evidence is equal: evidence comes in different shapes and sizes, and some sources carry more weight than others.

The Every Student Succeeds Act (ESSA) provides district leaders with useful guidance on what research is needed for a product to be considered evidence-based. ESSA was enacted in 2015 and designed to encourage the use of evidence-based interventions in K-12 school systems. Policymakers recognized that evidence exists on a continuum of rigor and that innovation is necessary for progress. With that in mind, they laid out four tiers of evidence, with the first tier being the most rigorous: (1) Strong, (2) Moderate, (3) Promising, and (4) Demonstrates a Rationale. These tiers give school administrators a framework for judging how confident they can be in a product's potential impact on their students.

So, who assigns these evidence tiers to an evaluation, and how are they determined? Several independent and university-affiliated research outfits across the country design and/or validate studies, including LearnPlatform by Instructure! Our research team of What Works Clearinghouse (WWC)-certified reviewers and subject matter experts designs and validates studies of learning solutions. Collectively, the team has over 70 years of experience in education research and preK-16 program evaluation. Our interdisciplinary team includes ten researchers with advanced degrees in developmental psychology, educational psychology, educational research and evaluation, educational research methods, industrial and organizational psychology, mathematics education, sociology, and teacher education and learning sciences. Our team members are certified in the WWC Group Design Standards and have extensive experience designing studies to meet WWC, ESSA, EDGAR, and SEER standards.

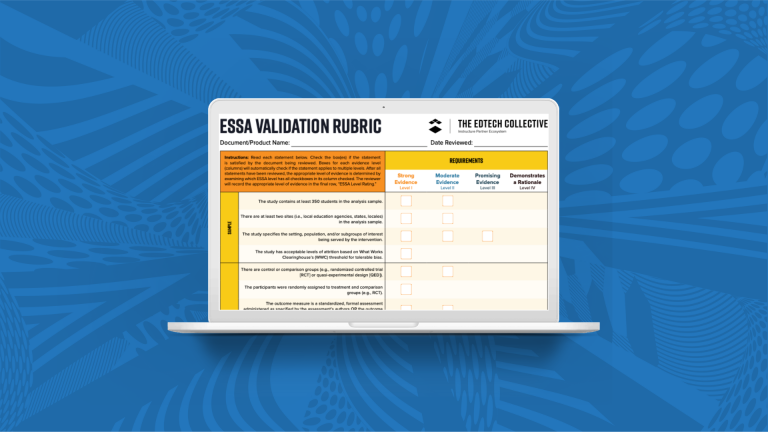

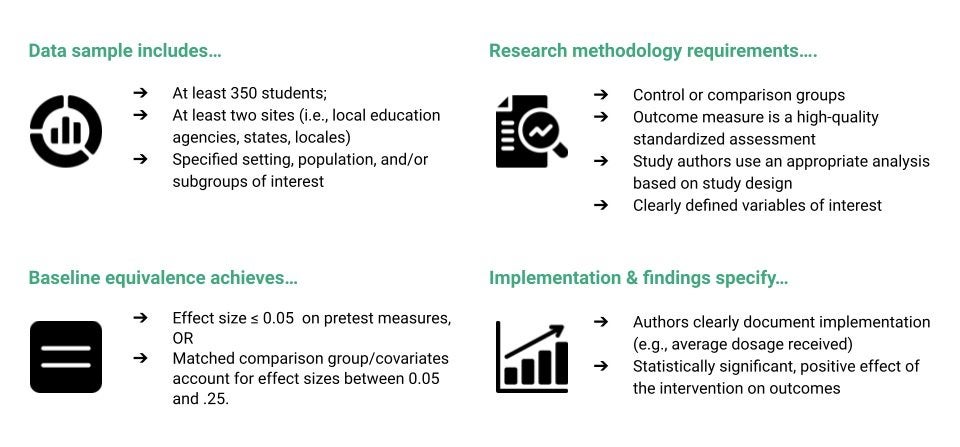

To determine the strength of a research design and the effectiveness of a learning solution, LearnPlatform developed an ESSA Validation Rubric with five factors derived from ESSA regulatory and non-regulatory standards, EDGAR, SEER, and WWC guidance and standards: (1) sample, (2) methodology, (3) baseline equivalence, (4) implementation and findings, and (5) other. Trained reviewers independently and systematically evaluate a study against the rubric criteria. The reviewers then discuss their ratings and jointly determine which tier of evidence the study meets.

Zooming back out, what do the four different tiers mean for gauging the strength of a study for evidence-based decision-making?


An ESSA Tier IV study (Demonstrates a Rationale) indicates that a learning solution is based on existing research in the field. Evidence at this tier does not come from research on the learning solution itself; instead, it draws on existing research to make the case that the solution should be effective. ESSA Tier IV also requires a clear logic model describing how the solution is expected to improve outcomes, along with plans for an ESSA Tier III or higher study of the solution.


An ESSA Tier III rating indicates promising evidence of effectiveness. ESSA Tier III studies are often* treatment-only, correlational studies that account for students' prior performance and other demographic variables. Such studies cannot support causal claims about the effectiveness of an edtech intervention, so their results should be interpreted with caution when making decisions. Reviewing the study context and participants can help an administrator determine whether the study was conducted in a setting similar to their own, which may engender greater confidence.


An ESSA Tier II rating indicates moderate evidence of effectiveness, typically from a well-designed and well-implemented quasi-experimental study. Results should be interpreted with the study setting and context in mind. An administrator can be fairly confident that the edtech intervention "works," but this is not a guarantee, since results can vary depending on the context and the fidelity of implementation.


Lastly, the most rigorous design is ESSA Tier I, which shows strong evidence of effectiveness. ESSA Tier I studies are well-designed and well-implemented randomized controlled trials. Such studies can be expensive and time-consuming to conduct and are less common in the existing evidence base. Beyond cost and time barriers, many researchers raise ethical concerns about withholding educational interventions from students (Berliner, 2002). Whatever the tier (IV through I), the LearnPlatform ESSA badges and validation rubric were designed to provide transparency and demystify the ESSA tiers of evidence so that busy school administrators can make informed decisions.

At LearnPlatform by Instructure, we are proud to be a research-based organization, and we love pulling back the curtain on the nuances of evidence-based edtech. Check out our Evidence-as-a-Service subscriptions to learn more about how we work with edtech providers at all stages to build evidence.

For more insight on the four levels of ESSA Evidence, check out these Six Practical Tips for District Administrators to Use ESSA-Aligned Research Studies.

* Quasi-experimental studies that have smaller samples (fewer than 350 participants) and/or do not use standardized outcome measures as defined by WWC standards can receive an ESSA Tier III rating.

Berliner, D. C. (2002). Comment: Educational research: The hardest science of all. Educational Researcher, 31(8), 18-20.
