AI is gaining ground in education on two fronts: faculty are experimenting with it to create teaching materials and manage workload, and learners are using it for research and writing support. Surveys from the Digital Education Council confirm this shift, showing majorities in both groups already engaging with AI.

Yet the same research highlights deep concerns about fairness, ethics, and reliability, the very risks that institutions must confront if they want to guide AI’s role in learning.

This mix of momentum and hesitation, which we call the AI conundrum, was the focus of our recent APAC webinar, Introducing IgniteAI: Secure, in-context AI for education. In this blog, we'll explore what that conundrum looks like and why it matters for education leaders today.

What are faculty really doing with AI?

According to the Digital Education Council Global AI Faculty Survey 2025, 61 percent of educators are already incorporating AI into their teaching. The most common applications are practical: creating teaching materials (75 percent), supporting administrative tasks (58 percent), boosting learner engagement (45 percent), and generating feedback for assignments (28 percent).

For education leaders, these findings point to more than a passing trend: AI is moving into everyday teaching practice. The real challenge is determining how institutions should guide and govern its use.

Why are educators still cautious about AI?

The survey revealed that 72 percent of educators worry about fairness, ethics, and institutional risk, while 58 percent flagged issues with accuracy and reliability.

As Melissa Loble, Instructure’s Chief Academic Officer, explained during the webinar, "Reliability and trust are big hurdles. We need to ensure AI is an assistive tool, not a replacement tool. Human oversight is essential."

Educators are also contending with a fragmented marketplace. With dozens of AI tools available, it's often unclear which ones are secure, how they align with institutional priorities, and whether they genuinely solve problems rather than create new ones.

For leaders, the takeaway is clear: effective AI adoption isn’t about rushing to deploy the latest tool, but about creating an environment where educators can trust the technology, where risks are managed, and where the human role in teaching and learning is reinforced.

What does the AI conundrum look like in practice?

The conundrum comes into play when adoption moves faster than governance. Educators and learners are experimenting with AI every day, but institutions have to weigh enthusiasm against risks like fairness, reliability, and trust. Leaders are caught between wanting to encourage innovation and needing to protect academic integrity.

Some universities in the Asia-Pacific region are showing how to navigate this balance.

- RMIT University in Melbourne created Val GenAI, a private and secure AI assistant for staff and learners. Val includes custom personas designed for academic support, practice quizzes, essay feedback, and prompt-crafting guidance. The initiative grew from the recognition that learners were already experimenting with AI, so the university built a tool aligned with its teaching values.

- The University of Sydney piloted Cogniti, an educator-built platform that allows faculty to create guided AI “doubles” enriched with course materials and rubrics. Cogniti is institutionally hosted and has already been adopted by more than 20 institutions worldwide. It shows how AI can be developed within institutions to serve their own teaching goals.

These examples matter because they demonstrate how the conundrum can be addressed in practical ways: shaping AI around educational priorities instead of letting external tools set the agenda.

How Instructure is responding to the AI conundrum

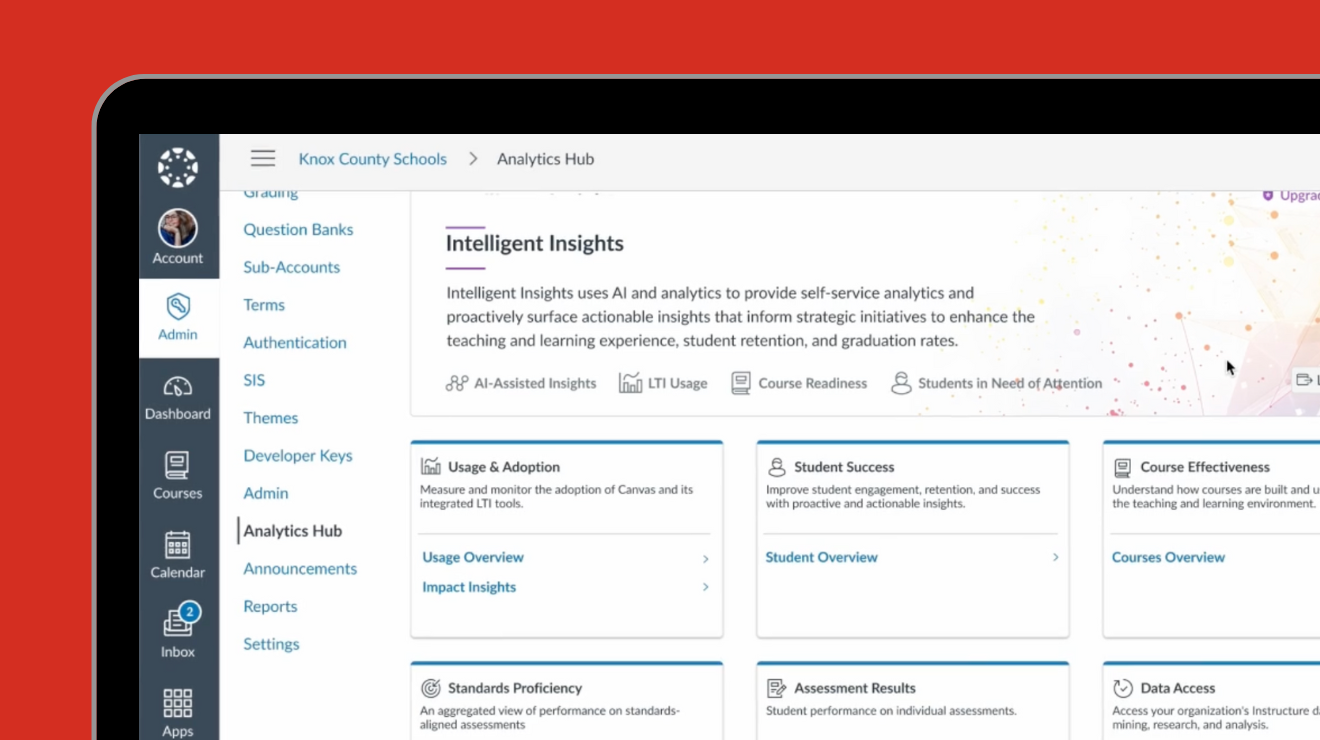

Our focus isn't on duplicating what specialist tools such as Grammarly already do well, but on providing targeted solutions that directly support educators and learners. That's why we've introduced IgniteAI, our secure, in-context AI for education, built right into the Canvas ecosystem.

Melissa explained our approach during the webinar: "We are not choosing to deploy AI for the sake of AI. We are employing AI tools that drive value, solving challenges educators have today."

The three principles shaping IgniteAI are:

- Confidence: Tools are vetted, secure, and transparent, with “nutrition facts” that show how models use data.

- Adoption: Faculty-first design ensures tools are intuitive and embedded into existing workflows, encouraging meaningful use.

- Value: Features such as rubric generation, discussion insights, and content accessibility remediation are designed to address challenges educators identified as priorities.

As I emphasised during the webinar, "Learning should drive the technology. Technology shouldn't drive the learning."

What the AI conundrum means for education leaders

The decisions that leaders make today will shape how AI supports learning tomorrow. Faculty and learners are already experimenting, and institutions that wait too long risk being left behind. But rushing in without safeguards brings its own risks, including a loss of trust and a weaker learning experience. The challenge isn’t whether to engage with AI, but how to do it in ways that strengthen teaching and protect equity.

That’s why a deliberate, education-first approach is essential. By grounding AI use in pedagogy, transparency, and educator needs, leaders can ensure it enhances teaching and learning rather than distracting from it. And that’s exactly the purpose of IgniteAI: bringing secure, in-context AI into Canvas in ways that educators can trust and use with confidence.