AI that puts people first.

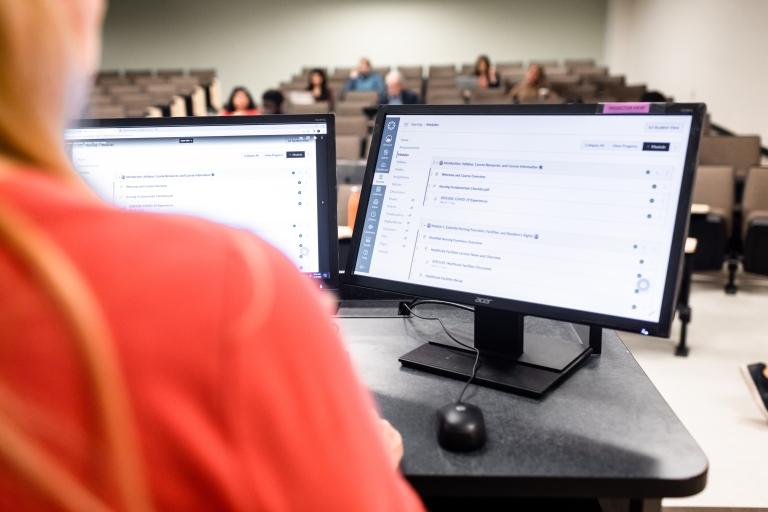

We love new technology—it's part of who we are. We also care deeply about using it responsibly. That’s why we work closely with our customers and partners to develop AI tools that support learners and educators.

AI is here to stay. So are our principles for responsible use.

Amplify human connection

Connection drives comprehension—and our mission will always be to build the world’s best tools for learning together.

Privacy and security

Student privacy and data security guide everything we do. And we never use customer data for AI systems without permission—ever.

Ethical, strategic implementation

We prioritize academic integrity and encourage customers to adopt AI training for faculty and ethical-use standards for students.

Our standards for internal AI use

Through AI Nutrition Facts, we’re always open about how, where, and when AI is used in our products and services.

AI is only as reliable as the data it’s given, which is why we work proactively to identify and eliminate biases in AI development.

We recognize AI has limitations, and believe it’s best used as a tool to augment human decision-making.

We train our own employees on responsible AI development, and seek out partners who do the same.

We make intentional design choices that safeguard privacy for both individuals and institutions.

AI Resources

Blogs

Instructure’s Generative AI Innovations Aligned with US Department of Education, UNESCO & Other Global Policy Guidelines

Blogs

The Top Five Ways AI Will Impact Teacher-Student Relationships

Ebooks & Buyer’s Guides

AI Foundational Guide for K-12 Leaders and Educators

Ebooks & Buyer’s Guides

AI Foundational Guide for Higher Education

Read more about how we use AI.

Or have your virtual AI assistant do it, if you want to get really ironic.